There are different ways to quantify statistical significance, and this blog post discusses the most prominent ones you are likely to use.

The P Value

There are two main ways of quantifying statistical significance: the P value and the confidence interval.

The P value is one of the most misunderstood and misused concepts in statistics. In simple terms, the P value is the probability of obtaining a test statistic at least as extreme as the one calculated from the observed data, assuming that the null hypothesis is true.

The P value does not denote clinical significance; it only denotes statistical significance. When conducting a statistical hypothesis test, the researcher calculates a test statistic and compares it to a predetermined critical value. The formulae for the common test statistics all contain the sample size, so for a given nonzero difference, whatever kind of statistic is used, the larger the sample size, the larger the absolute magnitude of the test statistic and the greater the likelihood that it will exceed the critical value that represents the threshold for rejecting the null hypothesis.
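As an illustration, assuming equal group sizes n and a common standard deviation s (details the post does not specify), the two-sample z statistic for a difference in means shows exactly where the sample size enters:

```latex
z = \frac{\bar{x}_A - \bar{x}_B}{s\sqrt{\tfrac{1}{n} + \tfrac{1}{n}}}
  = \frac{(\bar{x}_A - \bar{x}_B)\sqrt{n}}{s\sqrt{2}}
```

Holding the observed difference and s fixed, z grows in proportion to the square root of n, so quadrupling the sample size doubles the statistic.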

We will now examine an example of the P value. Imagine that I am interested in comparing mathematics test scores between school A and school B.

- Assume that I believe that the “truth” is that students from school A are no better at mathematics than students from school B.

- I then administer a math test to a sample of students from both schools, ensuring that the students in the sample are of the same age and had the same grades over the past 2 years.

- The null hypothesis, based on this belief about the “truth,” is that the mean math test scores of the two schools do not differ.

- I then calculate the average score for each school and note that the mean score for school A is 30% higher than the mean for school B.


- At this point, what is the probability that, if students from schools A and B are truly equal at taking a math test, I would take a sample of those students and find a difference in mean test scores at least as extreme as the one observed?

- Without doing any formal calculations, it is clear that this probability would be low. If the two groups of students are truly equal at math, it is unlikely that I could take a large sample from each school and end up with mean math test scores as different as those observed.

- This probability is the P value. In this example, the P value would almost certainly be very low, most likely less than the conventional threshold of 0.05.

- Consequently, I would reject the null hypothesis.
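The school example can be made concrete with a minimal sketch. The numbers are hypothetical and not from the post: school B averages 50 points, school A averages 65 (30% higher), both have a standard deviation of 15, and 50 students are sampled from each school. The sketch uses a large-sample two-sample z-test; `two_sample_z_test` is an illustrative name, and for small samples a t-test would be the more usual choice.

```python
import math

def two_sample_z_test(mean_a, mean_b, sd_a, sd_b, n_a, n_b):
    """Large-sample two-sided z-test for a difference between two means."""
    # Standard error of the difference between the two sample means
    se = math.sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)
    z = (mean_a - mean_b) / se
    # Two-sided P value: P(|Z| >= |z|) under the null, equal to erfc(|z| / sqrt(2))
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p

# Hypothetical summary data: school A's mean is 30% higher than school B's
mean_b = 50.0
mean_a = mean_b * 1.30
z, p = two_sample_z_test(mean_a, mean_b, sd_a=15.0, sd_b=15.0, n_a=50, n_b=50)
print(f"z = {z:.2f}, P = {p:.2g}")
if p < 0.05:
    print("Reject the null hypothesis at the 0.05 level")
```

With these assumed values the standard error is 3 points, z is 5.00, and the P value is far below 0.05, matching the reasoning in the steps above.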

To put it simply, no matter how small the difference between two groups is, the P value can be progressively lowered by increasing the sample size, as long as the true difference is not exactly zero. Almost any difference can be made statistically significant if the sample size is large enough.
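A short sketch of this effect, assuming a hypothetical 1-point difference on a test whose standard deviation is 15 points (a very small effect), equal group sizes, and the same large-sample z-test as before. `p_value_for_difference` is an illustrative name.

```python
import math

def p_value_for_difference(diff, sd, n_per_group):
    """Two-sided z-test P value for a mean difference, assuming equal group sizes and a common SD."""
    se = sd * math.sqrt(2 / n_per_group)   # standard error of the difference
    z = diff / se
    return math.erfc(abs(z) / math.sqrt(2))

# Hypothetical: the same 1-point difference observed at increasing sample sizes
for n in (100, 1_000, 10_000, 100_000):
    print(f"n per group = {n:,}: P = {p_value_for_difference(1.0, 15.0, n):.3g}")
```

The difference itself never changes; only the sample size does. At 100 students per group the result is nowhere near significant, while at 10,000 per group the same tiny difference falls well below 0.05.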

Confidence Interval

The second way to quantify statistical significance is the confidence interval. An X% confidence interval is a range of values around a sample estimate of a parameter (such as a mean or proportion). The interval is constructed in such a way that, if the sampling were repeated many times, X% of the intervals built this way would contain the true population value of that parameter.

Typically, population parameters cannot be measured directly because the researcher does not have access to the entire population of interest. However, the common confidence intervals are constructed in the same general way: the interval equals the sample estimate ± a multiplier × the standard error of the estimate. The multiplier is a direct function of the confidence level: the multiplier for a 95% confidence interval is larger than the multiplier for a 90% confidence interval, so the 95% interval is wider. The standard error, in turn, is inversely proportional to the square root of the sample size: the larger the sample size, the smaller the standard error and the narrower the confidence interval.
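A minimal sketch of the "estimate ± multiplier × standard error" recipe for a mean, assuming a normal-approximation (z) multiplier and hypothetical summary values (mean 50, standard deviation 15). `confidence_interval` is an illustrative name; for small samples a t multiplier would normally replace the z multiplier.

```python
import math
from statistics import NormalDist

def confidence_interval(estimate, sd, n, level=0.95):
    """Normal-approximation CI for a mean: estimate +/- multiplier * standard error."""
    se = sd / math.sqrt(n)                              # standard error shrinks with sqrt(n)
    multiplier = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.645 for 90%, 1.960 for 95%
    return estimate - multiplier * se, estimate + multiplier * se

# Hypothetical sample summary: mean 50, standard deviation 15
for level in (0.90, 0.95):
    for n in (25, 100):
        low, high = confidence_interval(50.0, 15.0, n, level)
        print(f"{level:.0%} CI, n = {n}: ({low:.2f}, {high:.2f}), width {high - low:.2f}")
```

Both effects described above show up in the printed widths: at a fixed n the 95% interval is wider than the 90% interval, and quadrupling n from 25 to 100 halves the width at either level.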

In the next example, we will examine how I could use the confidence interval:

- First, I take a sample.

- From that sample, I calculate an estimate of the parameter of interest, such as the mean or proportion.

- Imagine that I repeat the sampling procedure 100 times and that, each time I obtain a sample, I construct an interval around the sample estimate. What percentage of those 100 intervals would contain the true value of the population parameter I am estimating? The confidence level answers this question: for a 95% confidence interval, about 95 of the 100 intervals would be expected to contain the true value.
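The repeated-sampling thought experiment can be simulated directly. This sketch assumes a hypothetical normally distributed population with a known true mean of 50 and standard deviation of 15, draws samples of 50 students, builds a 95% normal-approximation interval from each sample, and reports the fraction of intervals that contain the true mean. `simulate_coverage` is an illustrative helper name.

```python
import math
import random
from statistics import NormalDist, fmean, stdev

def simulate_coverage(true_mean=50.0, true_sd=15.0, n=50, level=0.95, repeats=100, seed=1):
    """Fraction of repeated-sample CIs (normal approximation) that contain true_mean."""
    rng = random.Random(seed)                            # seeded for reproducibility
    multiplier = NormalDist().inv_cdf(0.5 + level / 2)
    covered = 0
    for _ in range(repeats):
        # Draw a fresh sample from the hypothetical population
        sample = [rng.gauss(true_mean, true_sd) for _ in range(n)]
        estimate = fmean(sample)
        se = stdev(sample) / math.sqrt(n)
        if estimate - multiplier * se <= true_mean <= estimate + multiplier * se:
            covered += 1
    return covered / repeats

print(simulate_coverage())                  # 100 repetitions, as in the text
print(simulate_coverage(repeats=10_000))    # more repetitions give a steadier estimate
```

With only 100 repetitions the fraction varies from seed to seed around 0.95; with 10,000 repetitions it settles close to the nominal 95% (typically a little below it, because a z multiplier is paired with an estimated standard deviation).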

Association versus Causation

There is a two-step process for carrying out studies and evaluating evidence:

- Determine whether there is an association and whether that association is real rather than spurious.

- Determine whether that association is likely to be causal.

There are several guidelines to help determine whether an observed association is causal:

- The temporal relationship between exposure and disease: the exposure must precede the disease.

- The stronger the association (i.e., the higher the relative risk), the more likely the relationship is causal.

- A dose-response relationship, in which risk rises as the level of exposure increases, is strong evidence for a causal association.

- Findings are replicable.

- Biologic plausibility.

- Risk of disease is reduced when exposure to the factor in question is reduced.

- Association is consistent with other knowledge.
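The relative risk mentioned in the strength-of-association guideline is the risk of disease among exposed people divided by the risk among unexposed people. A minimal sketch with hypothetical cohort counts (not data from the post); `relative_risk` is an illustrative name.

```python
def relative_risk(exposed_cases, exposed_total, unexposed_cases, unexposed_total):
    """Risk of disease among the exposed divided by risk among the unexposed."""
    risk_exposed = exposed_cases / exposed_total
    risk_unexposed = unexposed_cases / unexposed_total
    return risk_exposed / risk_unexposed

# Hypothetical cohort: 30 of 200 exposed and 10 of 200 unexposed people develop the disease
rr = relative_risk(30, 200, 10, 200)
print(f"Relative risk = {rr:.2f}")
```

This prints a relative risk of 3.00: in this made-up cohort, exposed people have three times the risk of unexposed people. A value of 1 would indicate no association.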

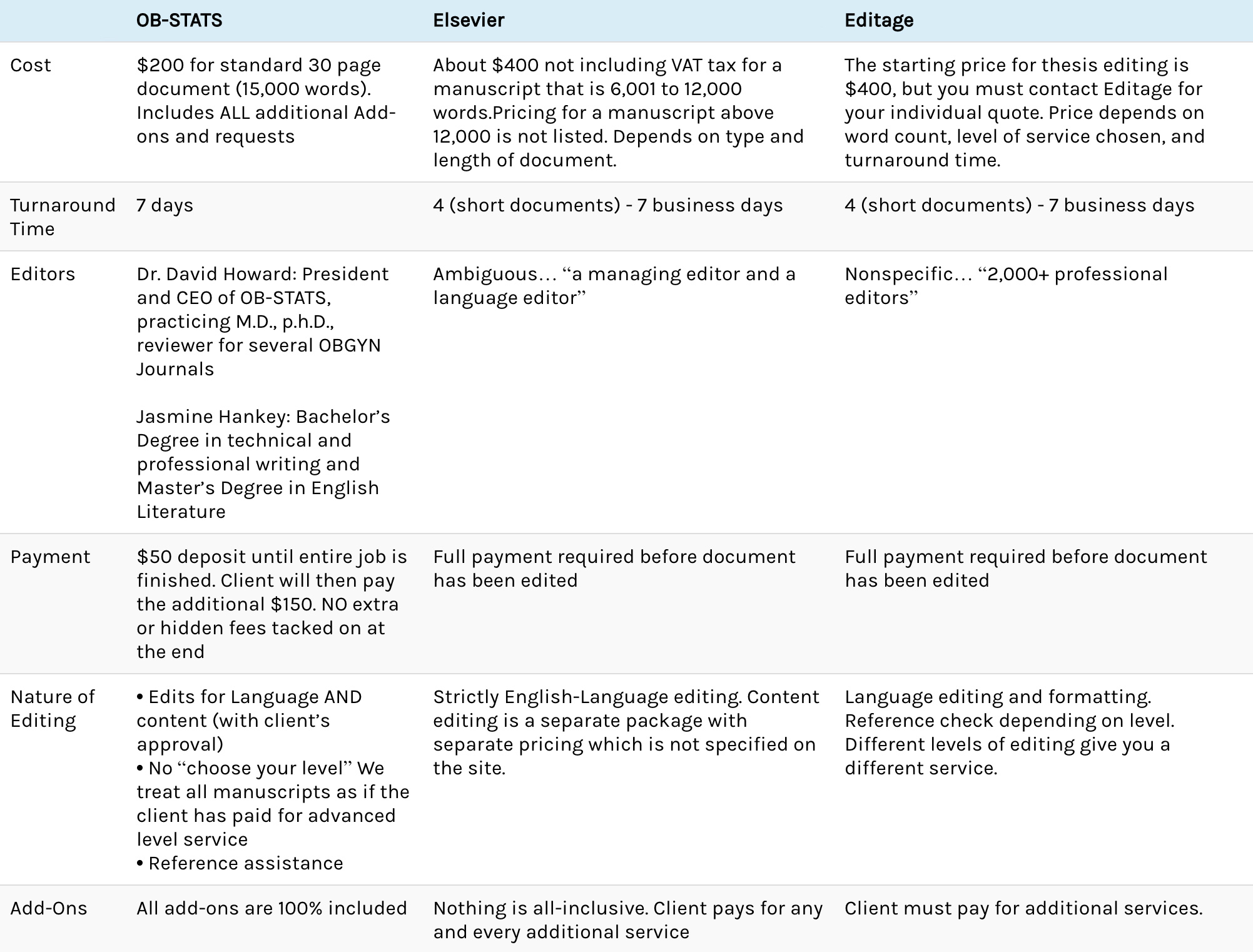

Connect With OB-STATS

Quantifying statistical significance can be a difficult concept. If you need additional help with this concept while conducting a research study, then the OB-STATS course “How To Do A Research Project” can help you. This course can also be purchased for $35.00 if you are interested in earning CME credit. To learn more about the course or to register for it, click below.